Cracking the Code: AI-native Intelligent Document Processing for Medical Records

Healthcare data is as vast as it is complex, encompassing millions of medical records, clinical notes, …

AI models, especially Large Language Models (LLMs), don’t degrade like perishable goods. But over time, you’ll notice the signs—skewed outputs and misalignments with your use case.

As the Head of Data Science at 314e, I’ve seen firsthand how easy it is to stick with a "working" version of an LLM. Stability is important, but in AI, what works today might underperform tomorrow. New and improved LLMs are being released at an almost dizzying pace, each potentially offering significant advantages in accuracy, speed, cost-effectiveness, or specialized capabilities. By clinging to a 'good enough' model, we risk missing out on gains that could drastically improve our solutions and provide a competitive edge.

Recently, we faced a decision: Should we allocate valuable engineering time to migrating from our current v3.1 model to the promising, but untested, v3.2? It wasn’t just about chasing the latest features—it was about performance, risk, infrastructure compatibility, and long-term value.

In this post, I’ll cover:

Think of this as a peek under the hood of how we make technical product decisions—rooted in research, shaped by real-world constraints.

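A deployed model’s weights don’t change on their own, but its usefulness does. AI models, especially fine-tuned Large Language Models (LLMs), lose accuracy over time as the data they see in production moves away from the data they were trained on. This phenomenon, often called model decay and typically driven by data or concept drift, quietly undermines performance and, if unaddressed, erodes user trust.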

The reason is simple: data evolves. Language shifts, behavior changes, and domain knowledge advances. A model trained on yesterday’s information may struggle with today’s context. Even cutting-edge models can begin producing responses that feel off or outdated.

This isn’t just theory. In fast-changing fields like healthcare, finance, and customer service, performance can drop within months. Stale models lose accuracy, introduce bias, and miss critical context, often without obvious warning signs.
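Because the decline is often silent, one simple way to surface it is to compare accuracy on a recent, human-labeled sample against the accuracy measured when the model was validated. The sketch below is a minimal illustration, not a description of any particular production system: the function names, the document-type labels, and the 5-point `max_drop` threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DriftReport:
    baseline_accuracy: float
    recent_accuracy: float
    drop: float
    drifted: bool


def accuracy(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the reference labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def check_drift(
    baseline_accuracy: float,
    recent_predictions: Sequence[str],
    recent_labels: Sequence[str],
    max_drop: float = 0.05,  # assumed tolerance; tune per use case
) -> DriftReport:
    """Flag drift when recent accuracy falls more than max_drop below baseline."""
    recent = accuracy(recent_predictions, recent_labels)
    drop = baseline_accuracy - recent
    return DriftReport(baseline_accuracy, recent, drop, drop > max_drop)


# Hypothetical sample: two of four recent documents are misclassified.
report = check_drift(
    baseline_accuracy=0.95,
    recent_predictions=["progress_note", "lab_report", "consultation_report", "lab_report"],
    recent_labels=["progress_note", "lab_report", "progress_note", "discharge_summary"],
)
print(report)
```

A check like this only works if someone keeps labeling a small sample of recent documents, which is usually the real cost of monitoring.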

Staying relevant doesn’t always require full retraining, but it does demand continuous monitoring, frequent evaluation, and timely updates. Without these, even the best models drift out of sync.

Few industries highlight the risks of model decay as clearly as healthcare, where precision is critical.

Imagine an LLM fine-tuned to classify clinical documents—like discharge summaries or lab reports—and extract structured data such as diagnoses, medications, and procedures. It may perform well initially, but as medical standards and data evolve, gaps begin to form.

For instance, the model might have been trained to distinguish between 'Progress Notes' and 'Consultation Reports' based on specific keywords and formatting. If a hospital later changes its templates or terminology, those cues stop being reliable and the model may begin confusing the two document types. That misclassification can have serious consequences if the reports are then routed to the wrong specialists or flagged with the wrong priority. Even subtle shifts in documentation, driven by EHR changes or hospital policies, can break what once worked reliably.

Over time, these issues accumulate:

And in healthcare, these aren’t just errors—they’re risks. Flawed extractions can skew analytics, mislead clinical decision tools, and affect patient outcomes.

In such high-stakes settings, model maintenance is a safety and compliance requirement, not just a technical best practice. Regular updates, strong governance, and retraining pipelines are essential to ensure models stay effective and trustworthy.

This isn’t anecdotal—research confirms the need for ongoing model upkeep:

Neglecting model maintenance can lead to:

A Structured Approach to Maintenance

Organizations like IBM recommend the following practices to stay ahead of drift:

While regular model updates are essential for long-term performance, blindly upgrading to the latest version isn’t always the best move. There’s a trade-off between staying cutting-edge and maintaining system stability, especially in high-stakes or production environments.

Teams often hesitate to upgrade because:

In these cases, staying on a stable version may be safer and more cost-effective, especially when the existing model is well-understood, thoroughly validated, and still performing within acceptable bounds.

Not all updates are created equal. Sometimes, new versions focus on:

Before upgrading, teams should ask:

If the answer to any of these is uncertain, pausing the upgrade might be the right call.

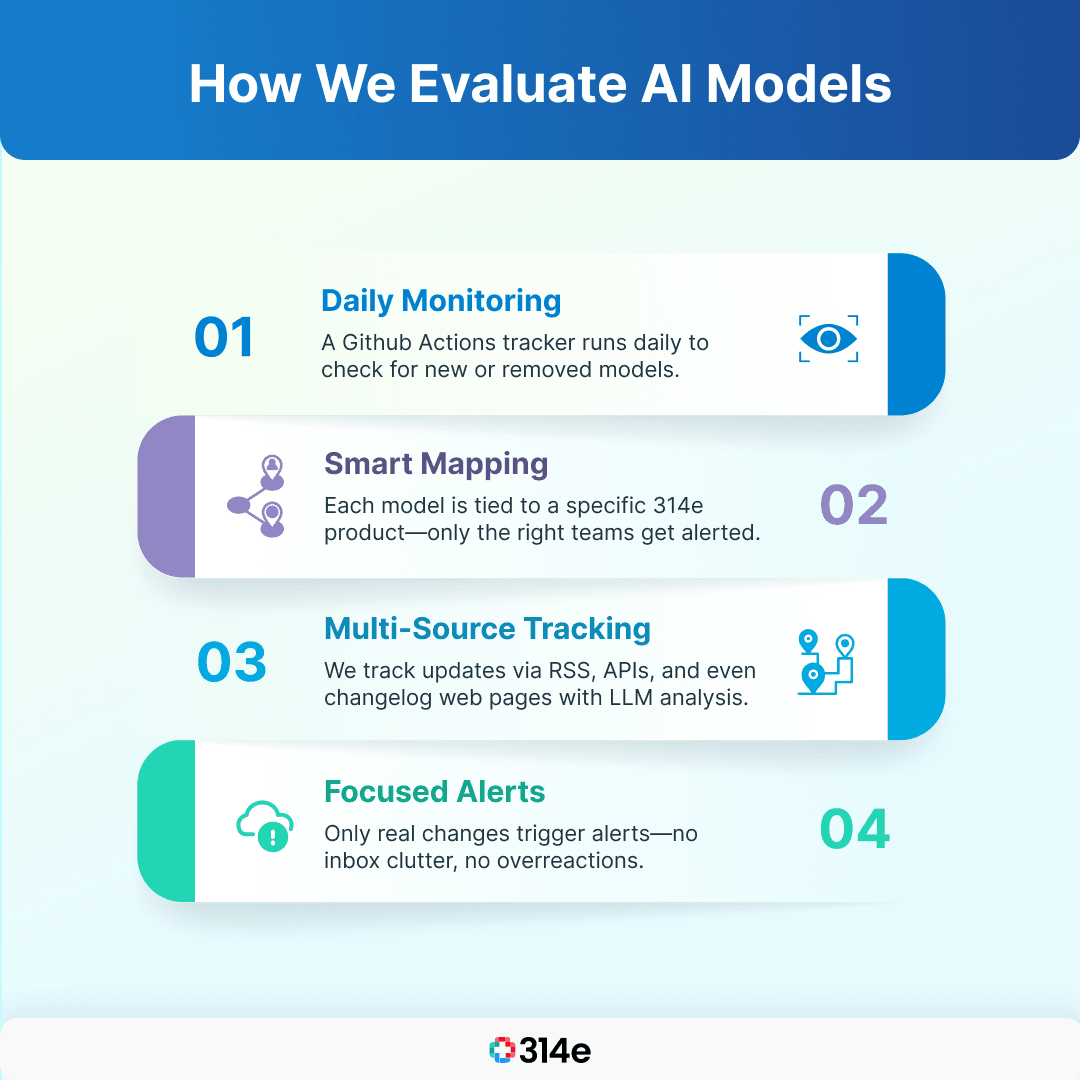

AI model updates can come out of nowhere—often with little fanfare and even less clarity. That’s why we’ve built a systematic, automated process at 314e to stay informed, test with rigor, and upgrade only when it makes real sense.

We monitor new model releases across all major providers using a custom-built GitHub Actions tracker that runs daily. It keeps our teams up to date without flooding inboxes or triggering unnecessary updates.

Each AI model we use is mapped to a specific 314e product. When a new model is released or an old one is removed, a targeted alert is sent only to the relevant stakeholders, ensuring the right teams are notified without spamming the rest.
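The core of such a tracker can be sketched as a set difference between yesterday's and today's model lists, followed by a lookup that routes each change to its owners. This is an illustrative sketch only: the model identifiers, team names, and the idea of matching a model to a product by a family prefix are assumptions made for the example, and fetching the lists from each vendor is left out.

```python
from typing import Dict, List, Set


def diff_models(previous: Set[str], current: Set[str]) -> Dict[str, List[str]]:
    """Return the models that appeared or disappeared since the last run."""
    return {
        "added": sorted(current - previous),
        "removed": sorted(previous - current),
    }


def route_alerts(
    changes: Dict[str, List[str]],
    family_owners: Dict[str, List[str]],
) -> Dict[str, List[str]]:
    """Group change messages by owner so each team sees only its own models.

    family_owners maps a (hypothetical) model-family prefix to the teams
    whose product depends on that family.
    """
    alerts: Dict[str, List[str]] = {}
    for change_type, model_ids in changes.items():
        for model_id in model_ids:
            for family, owners in family_owners.items():
                if model_id.startswith(family):
                    for owner in owners:
                        alerts.setdefault(owner, []).append(f"{change_type}: {model_id}")
    return alerts


# Hypothetical snapshots from two consecutive daily runs.
previous = {"family-a-v3.1", "family-b-v1"}
current = {"family-a-v3.1", "family-a-v3.2"}
family_owners = {
    "family-a": ["team-extraction"],
    "family-b": ["team-search"],
}

changes = diff_models(previous, current)
alerts = route_alerts(changes, family_owners)
print(alerts)
```

Models that match no known family produce no alert here, which is what keeps unrelated teams from being notified. A real tracker would probably also log unmatched models somewhere for review.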

Depending on the model’s source, we use different mechanisms:

This layered approach gives us full coverage across vendors, minimizes false alarms, and helps us avoid the trap of “panic updating” when nothing truly impactful has changed.

Once an update is flagged, it doesn’t go straight to production. It enters our evaluation pipeline, where we let the data speak.

We test both the new model and the current production model side by side.

The goal? Understand exactly what’s improved, what’s regressed, and what remains neutral.
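One way to make "improved, regressed, neutral" concrete is to run both models over the same labeled examples and bucket each example by which model got it right. The following is a minimal sketch under stated assumptions: the two stub functions stand in for real model calls, and the example texts and labels are invented for illustration.

```python
from collections import Counter
from typing import Callable, Iterable, Tuple


def compare_models(
    examples: Iterable[Tuple[str, str]],
    production: Callable[[str], str],
    candidate: Callable[[str], str],
) -> Counter:
    """Count examples where the candidate improved, regressed, or matched production."""
    outcome: Counter = Counter()
    for text, expected in examples:
        prod_ok = production(text) == expected
        cand_ok = candidate(text) == expected
        if cand_ok and not prod_ok:
            outcome["improved"] += 1
        elif prod_ok and not cand_ok:
            outcome["regressed"] += 1
        else:
            # Both right or both wrong: no change in outcome for this example.
            outcome["neutral"] += 1
    return outcome


# Stub classifiers standing in for the production and candidate models.
def production_stub(text: str) -> str:
    return "lab_report" if "results" in text else "progress_note"


def candidate_stub(text: str) -> str:
    return "consultation_report" if "consult" in text.lower() else "progress_note"


examples = [
    ("Pt seen for follow-up", "progress_note"),
    ("CBC results attached", "lab_report"),
    ("Cardiology consult requested", "consultation_report"),
]

summary = compare_models(examples, production_stub, candidate_stub)
print(dict(summary))
```

Tracking regressions separately from the net score matters because an upgrade that fixes ten cases and breaks five previously correct ones can look like a win on aggregate accuracy while still disrupting downstream workflows.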

Key questions we ask before approving any upgrade:

If the results are marginal—or the risks outweigh the gains—we hold off.
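A hold-off rule like this can be written down as an explicit gate so the decision is repeatable. The sketch below is one possible formulation, not the actual criteria used at 314e: the function name and both thresholds (`min_net_gain`, `max_regression_rate`) are assumptions for illustration.

```python
def should_upgrade(
    improved: int,
    regressed: int,
    total: int,
    min_net_gain: float = 0.02,         # assumed: require at least a 2-point net gain
    max_regression_rate: float = 0.01,  # assumed: tolerate at most 1% newly broken cases
) -> bool:
    """Approve an upgrade only if the net gain is meaningful and regressions are rare."""
    if total <= 0:
        raise ValueError("total must be positive")
    net_gain = (improved - regressed) / total
    regression_rate = regressed / total
    return net_gain >= min_net_gain and regression_rate <= max_regression_rate


print(should_upgrade(improved=30, regressed=5, total=1000))   # clear gain, few regressions
print(should_upgrade(improved=12, regressed=5, total=1000))   # marginal gain
print(should_upgrade(improved=60, regressed=25, total=1000))  # gain, but too many regressions
```

In the three calls above, only the first passes both conditions. The second fails on net gain and the third on regression rate.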

This empirical approach keeps us from blindly chasing trends. It ensures:

By combining real-time alerts with rigorous testing, we maintain a fine balance between innovation and operational reliability—making sure every model update is a step forward, not just a leap into the unknown.

Model updates aren't just about performance—they’re also about responsibility. As AI systems shape decisions in healthcare, finance, hiring, and more, staying current isn’t just a technical best practice. It’s an ethical one.

From a practical lens, using outdated models can quietly erode your competitive edge. While your team continues relying on a once-solid model, others may be:

Worse, stale models may fail to spot or adapt to shifting market signals. That could mean missing out on trends, user behaviors, or product opportunities, leaving innovation on the table. In fast-evolving industries, the cost of staying still can outweigh the risk of change.

Ethical standards evolve, just like data. Models trained months or years ago might still carry assumptions or biases that no longer align with today’s norms.

Outdated models can:

For example, a model trained on pre-2023 medical literature might not recognize newer gender-affirming care guidelines or updated diagnostic criteria. In this case, bias isn’t just hypothetical—it directly affects care quality.

Regular updates help address this by:

By keeping models current, we’re not just improving accuracy—we’re ensuring fairness, representation, and social responsibility.

At 314e, we see model updates as part of our ethical obligation to users, patients, and stakeholders. It’s how we:

In short, keeping models sharp isn’t just a technical strategy. It’s part of building AI you can stand behind.

AI model maintenance isn’t just a checkbox—it’s a continuous, strategic decision-making process. And like most things in engineering, it’s about trade-offs.

Stability vs. innovation. Accuracy vs. cost. Long-term value vs. short-term gains.

At 314e, we’ve learned that blindly chasing the latest model release can be just as risky as clinging to an outdated one. The real challenge lies in finding that balance—knowing when to hold steady, when to upgrade, and how to test those decisions rigorously.

Our process is far from perfect, but it’s informed by data, grounded in research, and shaped by real-world constraints across industries like healthcare, where precision and trust matter most.

Whether you're a data scientist, engineer, or product leader, the takeaway is simple:

Model drift is inevitable. But irrelevance isn’t—if you’re intentional about updates.

Stay curious. Stay current. And most importantly, stay responsible.
